2D/3D Image Acquisition with Data Fusion

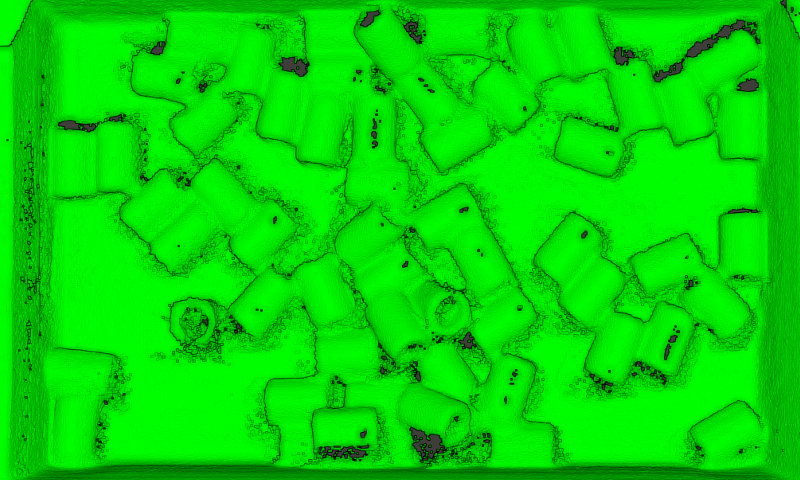

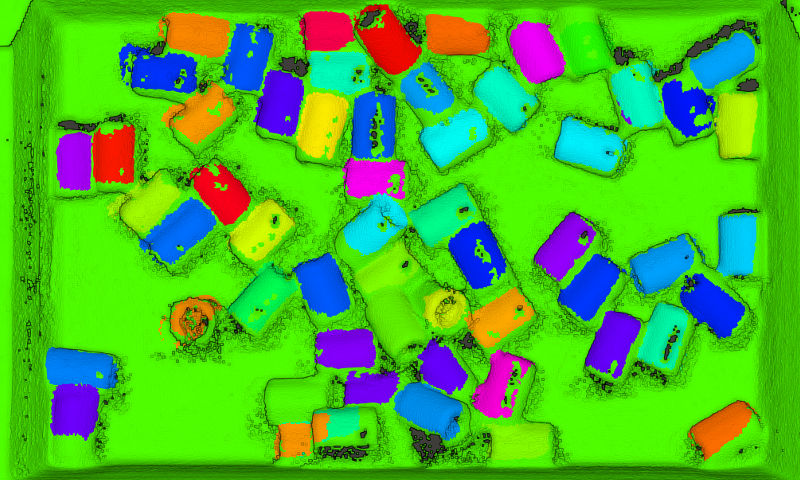

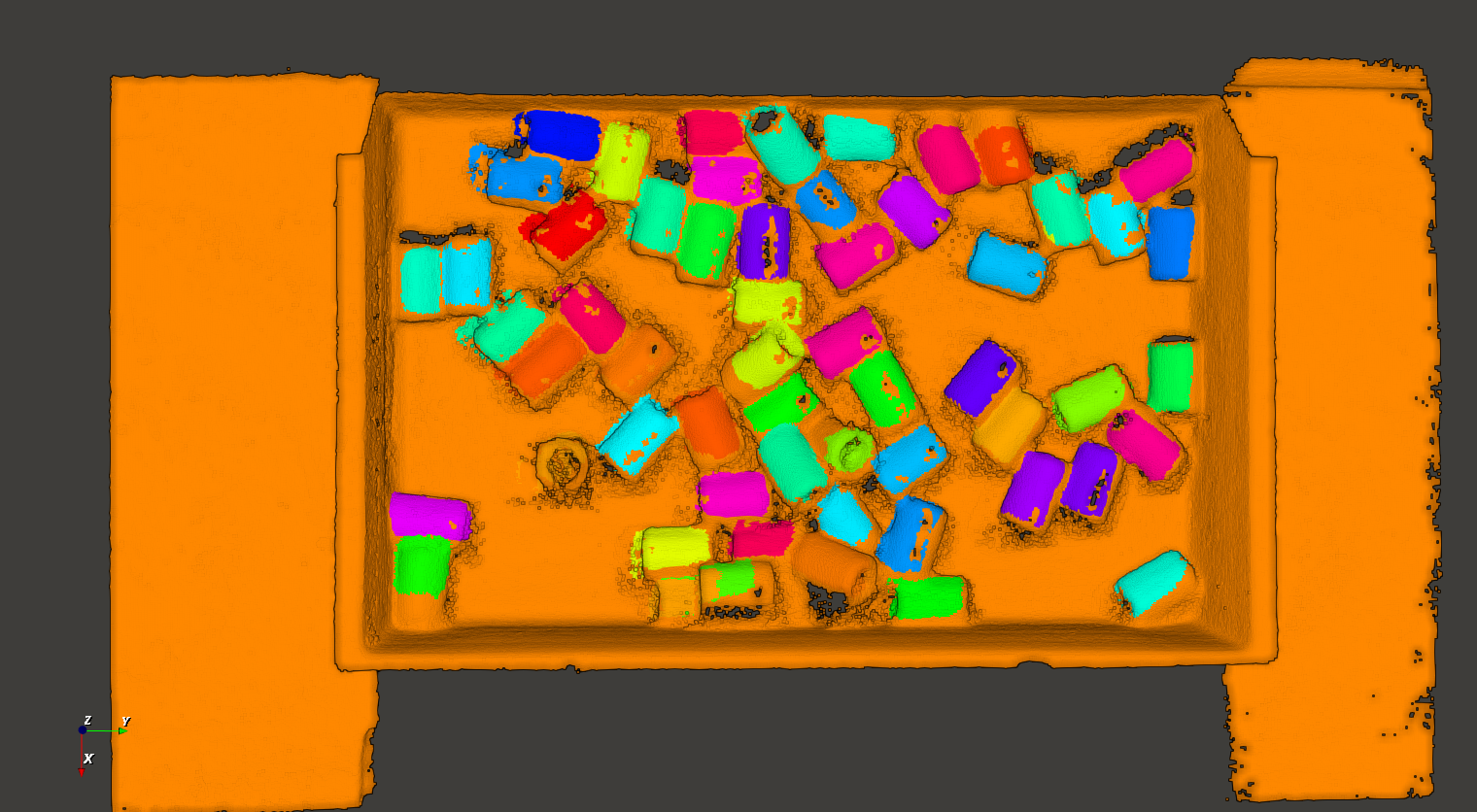

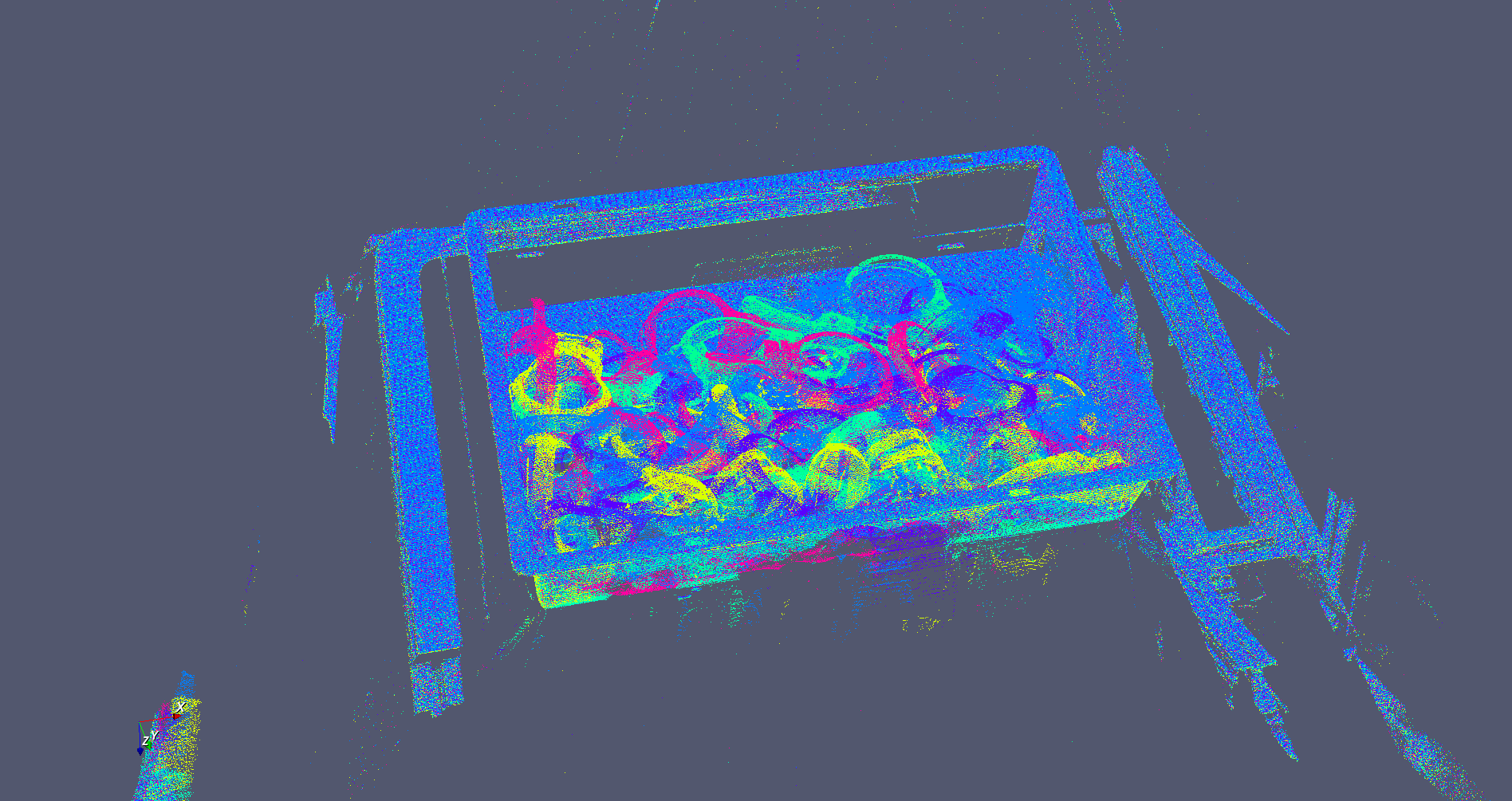

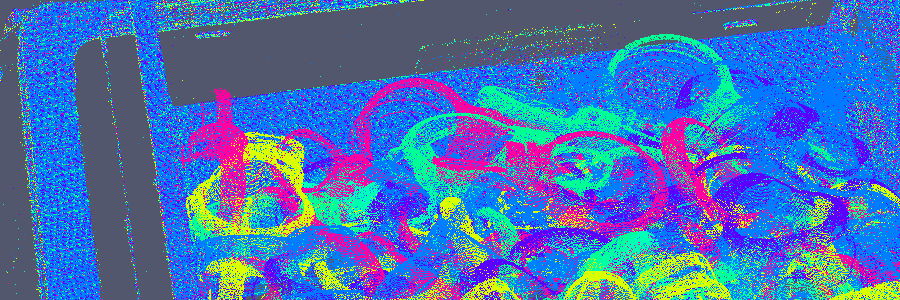

A combined 2D/3D sensor (Intel RealSense) mounted on the robot arm captures 2D color images and a fused depth image of the entire box. Because the 3D images are acquired from the moving arm, views taken from several angles and camera positions can be fused into one comprehensive 3D image of the objects.
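The fusion step described above can be illustrated with a minimal sketch. It assumes that each capture comes with the camera pose (a rotation matrix and a translation) reported by the arm at capture time, and that points landing in the same small voxel are averaged. The function names, the voxel size, and the data layout are illustrative assumptions, not part of the system described here.

```python
import math

def transform_point(rotation, translation, p):
    """Map a point from the camera frame into the robot base frame."""
    return tuple(
        sum(rotation[r][c] * p[c] for c in range(3)) + translation[r]
        for r in range(3)
    )

def fuse_views(views, voxel=0.005):
    """Merge several depth captures into one point set in the base frame.

    views: list of (rotation, translation, points); the pose is assumed to be
    the camera pose known from the arm position at capture time.
    Points that fall into the same voxel are averaged, so overlapping views
    do not produce duplicate points.
    """
    cells = {}
    for rotation, translation, points in views:
        for p in points:
            q = transform_point(rotation, translation, p)
            key = tuple(math.floor(v / voxel) for v in q)
            sx, sy, sz, n = cells.get(key, (0.0, 0.0, 0.0, 0))
            cells[key] = (sx + q[0], sy + q[1], sz + q[2], n + 1)
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in cells.values()]

IDENTITY = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
# 90 degree rotation about z: the camera x axis points along the base y axis.
ROT_Z90 = ((0, -1, 0), (1, 0, 0), (0, 0, 1))

# The same physical point seen from two different camera orientations.
view_a = (IDENTITY, (0.0, 0.0, 0.0), [(0.101, 0.201, 0.301)])
view_b = (ROT_Z90, (0.0, 0.0, 0.0), [(0.201, -0.101, 0.301)])
fused = fuse_views([view_a, view_b])
```

In this toy example both views observe the same point, so the fused result contains a single averaged point rather than two.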

This type of mounting also eliminates the need for an external fixed sensor that could restrict the arm’s movements.

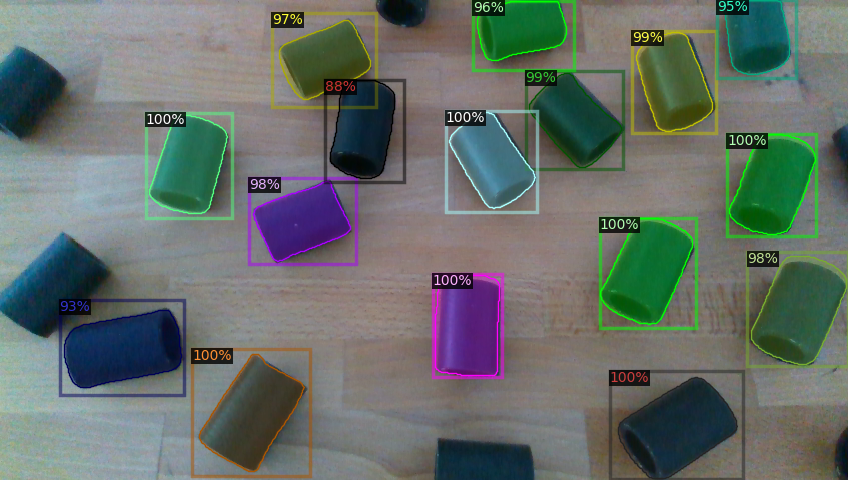

AI-Supported Object Detection and Localisation

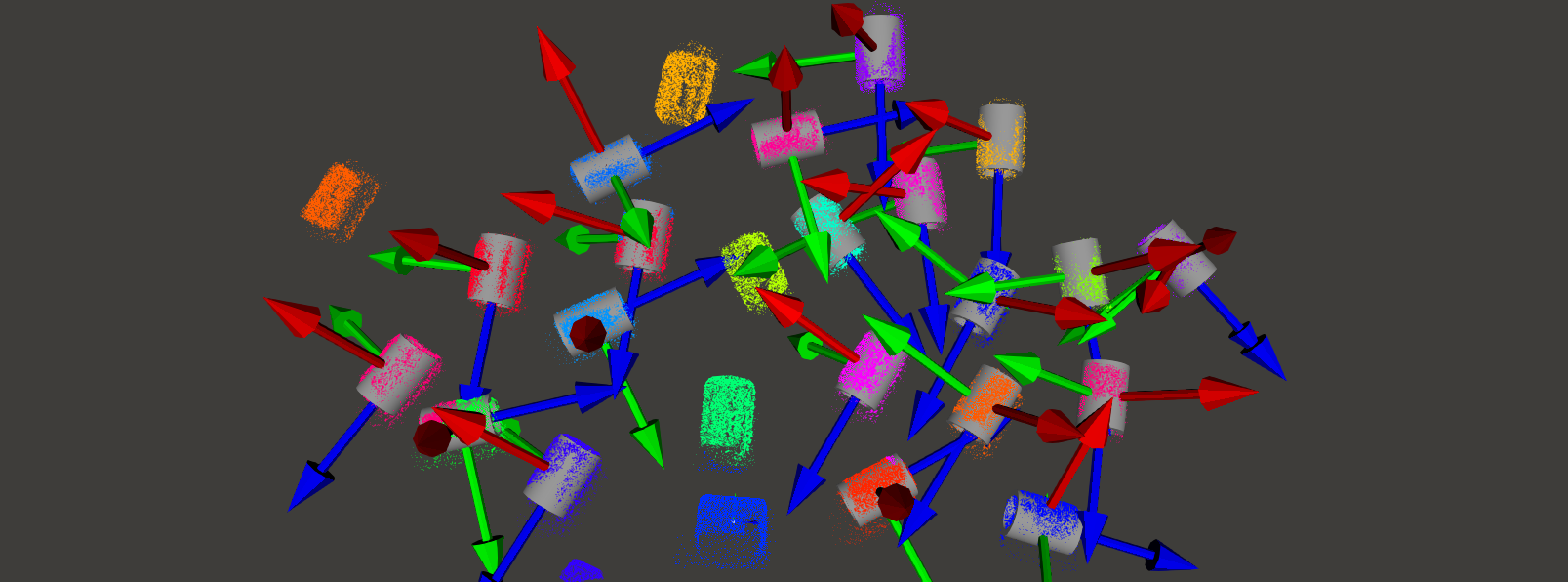

Trained artificial intelligence methods recognize the objects in the 2D image and pre-segment it. The positions determined in this way are then used to initialize the detection of the objects in the 3D depth images. The exact location of each object is determined by comparing the 3D image at these positions with a model of the object. This comparison is repeated iteratively, so the detected position of the object improves with each pass. The start positions supplied by the AI methods speed up this recognition step and can lead to qualitatively better results.
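The iterative comparison against a model can be sketched as follows. This is a deliberately simplified, translation-only variant of the iterative closest point (ICP) idea, not the method used by the system: it ignores rotation, and the names `refine_position`, `model`, `scene`, and `start` are assumptions made for illustration. The `start` value plays the role of the AI-derived initial position.

```python
def nearest(point, cloud):
    """Return the scene point closest to the given point."""
    return min(cloud, key=lambda q: sum((a - b) ** 2 for a, b in zip(point, q)))

def refine_position(model, scene, start, iterations=20, tol=1e-9):
    """Refine an object position by repeated model-to-scene matching.

    Each pass shifts the model to the current estimate, pairs every model
    point with its nearest scene point, and moves the estimate by the mean
    residual. The loop stops once the update becomes negligible.
    """
    t = list(start)
    for _ in range(iterations):
        moved = [tuple(m[i] + t[i] for i in range(3)) for m in model]
        pairs = [(p, nearest(p, scene)) for p in moved]
        step = [sum(q[i] - p[i] for p, q in pairs) / len(pairs) for i in range(3)]
        t = [t[i] + step[i] for i in range(3)]
        if sum(s * s for s in step) < tol:
            break
    return tuple(t)

# Toy object model and a scene in which the object sits at (0.5, 0.2, 0.1).
model = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.1)]
true_offset = (0.5, 0.2, 0.1)
scene = [tuple(m[i] + true_offset[i] for i in range(3)) for m in model]

# Rough initial guess, standing in for the position found in the 2D image.
estimate = refine_position(model, scene, start=(0.48, 0.21, 0.10))
```

A good initial guess matters because nearest-neighbour pairing is only reliable when the model already lies close to the true object position.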

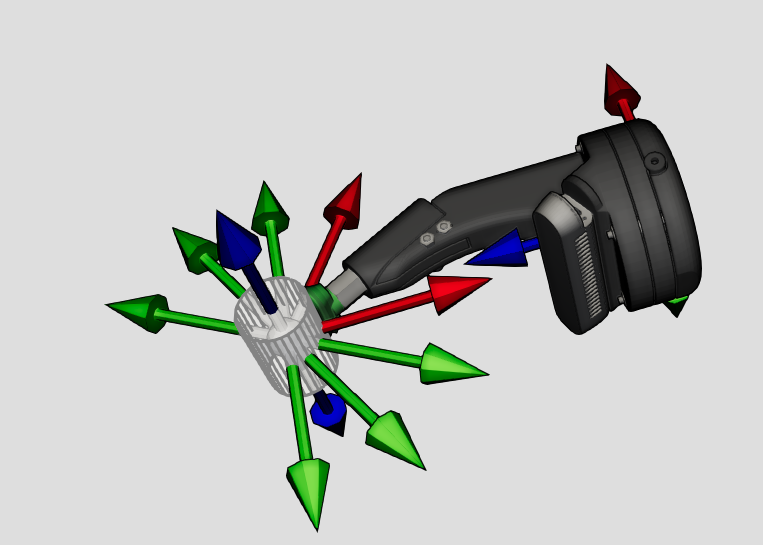

Motion and grasp planning

With the object positions and gripping points now known, a gripping movement can be planned. The tool in use and the surroundings of the objects suggest arm positions from which an object can be picked up safely and without collisions. Objects that are easy to reach are picked first. Objects that are partially hidden by others are thus exposed over time and become more and more accessible.
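The "easy to reach first" strategy can be sketched as a simple ordering rule. The sketch assumes that each detected object carries a height and the set of objects lying on top of it; this data layout and the function name `picking_order` are hypothetical and serve only to illustrate the idea.

```python
def picking_order(objects):
    """Return object names in the order they would be picked.

    objects: dict mapping a name to {"height": float, "covered_by": set}.
    Only objects with nothing on top are candidates; among those, the
    highest one is taken first. Removing it may uncover further objects.
    """
    remaining = {name: set(info["covered_by"]) for name, info in objects.items()}
    order = []
    while remaining:
        free = [name for name, cover in remaining.items() if not cover]
        if not free:
            raise ValueError("no accessible object; a new scan is needed")
        pick = max(free, key=lambda name: objects[name]["height"])
        order.append(pick)
        del remaining[pick]
        for cover in remaining.values():
            cover.discard(pick)  # the picked object no longer hides anything
    return order

crate = {
    "bolt": {"height": 0.05, "covered_by": {"plate"}},
    "plate": {"height": 0.10, "covered_by": set()},
    "nut": {"height": 0.08, "covered_by": set()},
}
order = picking_order(crate)
```

Here the bolt only becomes a candidate after the plate lying on it has been removed.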

The robot arm movements are checked in advance for possible collisions using the environmental information generated by the sensor system. By using full volume models of the robot and obstacles, safe and collision-free robot motion can be planned.
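A collision pre-check of this kind can be illustrated with a strongly simplified sketch. It assumes the gripper is approximated by a single sphere and obstacles by axis-aligned boxes, whereas the text describes full volume models; the function names and dimensions are illustrative assumptions.

```python
def sphere_hits_box(center, radius, box_min, box_max):
    """True if a sphere overlaps an axis-aligned box."""
    d2 = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        closest = min(max(c, lo), hi)  # clamp the center onto the box
        d2 += (c - closest) ** 2
    return d2 <= radius ** 2

def first_collision(waypoints, radius, boxes):
    """Return the index of the first colliding waypoint, or None if safe."""
    for i, center in enumerate(waypoints):
        if any(sphere_hits_box(center, radius, lo, hi) for lo, hi in boxes):
            return i
    return None

# One crate wall as an obstacle, and a gripper sphere of 5 cm radius.
crate_wall = ((0.40, -0.30, 0.00), (0.42, 0.30, 0.20))
risky_path = [(0.20, 0.0, 0.30), (0.30, 0.0, 0.25), (0.38, 0.0, 0.15)]
safe_path = [(0.20, 0.0, 0.30), (0.25, 0.0, 0.30), (0.30, 0.0, 0.30)]

hit = first_collision(risky_path, 0.05, [crate_wall])
clear = first_collision(safe_path, 0.05, [crate_wall])
```

A planner would reject or re-plan any motion for which such a check reports a colliding waypoint.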

This trajectory planning takes place entirely in DRS and uses the robot's own kinematics models. A motion can therefore be planned for robot arms from any manufacturer, regardless of the robot type and its built-in planning capabilities.

Execution on the robot

The individually calculated arm movement is transmitted from the system directly to the robot arm for execution. In this process, the planned robot movement is sent piece by piece to the real robot arm and executed in a monitored manner. Differences in the capabilities of arms from different manufacturers are compensated for, so the end user does not have to create manufacturer-specific programs.
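Piece-by-piece, monitored execution might look roughly like the sketch below. The `FakeArm` class is a stand-in for a manufacturer-specific driver and is purely hypothetical, as are the chunk size and the error threshold; the point is only that the calling code stays the same whichever driver sits behind the two methods.

```python
class FakeArm:
    """Stand-in for a vendor driver; it simply jumps to the last waypoint."""

    def __init__(self):
        self.position = (0.0, 0.0, 0.0)
        self.segments = []

    def send_segment(self, segment):
        self.segments.append(list(segment))
        self.position = segment[-1]

    def read_position(self):
        return self.position

def execute_monitored(trajectory, arm, chunk=2, max_error=0.01):
    """Send the trajectory in chunks and stop if the arm deviates too far.

    Returns (ok, waypoints_done).
    """
    done = 0
    for start in range(0, len(trajectory), chunk):
        segment = trajectory[start:start + chunk]
        arm.send_segment(segment)
        actual = arm.read_position()
        target = segment[-1]
        error = max(abs(a - t) for a, t in zip(actual, target))
        if error > max_error:
            return False, done  # abort before sending further pieces
        done += len(segment)
    return True, done

path = [(0.0, 0.0, 0.5), (0.1, 0.0, 0.5), (0.2, 0.0, 0.4),
        (0.3, 0.0, 0.3), (0.3, 0.0, 0.2)]
arm = FakeArm()
ok, done = execute_monitored(path, arm)
```

Any driver that offers the same two methods could be swapped in without changing `execute_monitored`.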

After an object has been picked from the chaotic crate, its position is precisely known. It can then be fed selectively into downstream processes such as assembly or machining, or set down again in an orderly manner.